2025

JUNO AI

I led design for JUNO, an AI-powered litigation platform built to redefine how personal injury law firms manage their cases. From the first sketches to a fully functional SaaS product, I shaped the core user experience, design system, and interface logic that turned our early prototype into an investor-ready platform.

This remains one of my proudest projects—an opportunity to design something that didn’t exist before in the legal space. It challenged me to translate complex workflows into clarity, and to build trust between lawyers and AI through thoughtful design.

Role

Design Owner

Team

1 product manager

1 designer

Juno Engineering Team

Skills

Design System

Competitive Analysis

Prototyping

Agent Interaction Design

Branding/Visual Design

Duration

6 months

Official Website

junolaw.ai

Designing an Agentic System for Litigation

The rise of agentic systems marks a turning point in how AI moves from assistance to autonomy. In legal technology, this transition is especially meaningful. Litigation is a domain defined by complex dependencies — facts, filings, arguments, and deadlines all evolving in parallel. Traditional LLM integrations can answer questions or draft text, but they remain passive participants. They don’t understand the case as a system.

At Juno, we’ve already built the foundation: a fully functional litigation operating system where law firms manage intake, documents, tasks, and drafting in one connected workflow. On top of this, we aim to make this system truly intelligent — by embedding a network of AI agents that can understand the case context, plan next steps, and execute work alongside the legal team.

From Tools to Teammate

We envision Juno as a collaborator within the case workflow rather than a set of isolated features. Instead of merely generating content, it perceives the relationships between facts, filings, and deadlines, then acts within that structure to help the case move forward. This shift transforms AI from a point solution into an active participant in the litigation lifecycle.

Our goal is to design a cohesive agentic system that supports every stage of litigation: helping lawyers discover insights, suggest and manage tasks, draft legal documents, and validate outputs with clarity and traceability. Each agent operates with shared context from the case knowledge base, ensuring continuity and trust across the user experience.

Designing for Agency and Oversight

Building this system requires balancing autonomy with accountability.

For repetitive, procedural tasks, agents act as intelligent automation, completing multi-step workflows while providing visibility through progress indicators and transparent logs.

For reasoning and drafting, agents become intelligent collaborators, surfacing insights, explaining rationale, and inviting user direction.

The design challenge is not to show “what AI can do,” but to ensure users always understand what it’s doing, why it’s doing it, and how to stay in control.

Through this approach, Juno moves beyond adding AI features toward building an agentic litigation ecosystem, one where context, reasoning, and human judgment work together to deliver real outcomes.

CASE STUDY #1

Building a Knowledge Base for an Incoming Case - AI Intake

In litigation, every case begins as chaos — hundreds of unstructured documents that must be organized before real work can start. Juno’s AI Intake transforms that step into intelligence. Instead of treating uploads as a passive feature, it actively reads, labels, and organizes each file, identifying parties, facts, and deadlines to build a structured case knowledge base.

The design goes beyond automation: it focuses on trust and transparency. Lawyers remain responsible for reviewing each document on file, and the case details view supports that review: they can see exactly which documents the AI referenced, validate extractions in context, and track every action through an auditable log. The result is a reliable, explainable foundation that supports drafting, research, and planning, and the first step in making Juno a truly agentic litigation system.

Design Evolution — From Extraction to Collaboration

Version 1 — The Founders’ Concept (Structured but Detached)

The initial concept, developed before my involvement, proposed a linear flow:

However, it introduced two major usability flaws:

Disconnection between questionnaire and documents - Users reviewed extracted answers with no visibility into where the data came from: nothing linked the information being confirmed to its source files.

Constrained interface and poor visibility - The entire flow was contained within a pop-up window, which severely limited space for document review. Although inline viewing technically existed, the preview area was too small for practical use, forcing users to scroll or open files separately. This layout made the experience cramped and disrupted the review flow.

As a result, users couldn’t fully trust or efficiently verify AI outputs — the system presented information without adequate room for context or confirmation.

INSIGHTS

Automation without transparency creates friction and mistrust.

Users need to see and understand how the AI reached its conclusions.

Version 2 — My Redesign: Context-Aware, Label-Driven Intake

To solve these problems, I redesigned the flow around document context instead of a questionnaire.

In the second version, the intake process was redesigned as a full-page experience instead of a pop-up. This change provided the necessary screen space for side-by-side document viewing and review, greatly improving clarity and reducing friction.

Each document was assigned a label, and each label carried its own extraction schema: a Police Report extracted accident details, a Medical Record extracted diagnoses and dates, and a Demand Letter captured damages and requested amounts.

This version made the process modular, transparent, and contextual, allowing the knowledge base to build itself dynamically as each document was reviewed.
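To make the label-driven idea concrete, here is a minimal sketch of how a label could map to its own extraction schema. It is an illustration only, not Juno's implementation: the field names and the `fields_for` helper are hypothetical, chosen to mirror the three example labels in the text.

```python
# Hypothetical mapping from document label to the fields extracted for it.
# Field names are illustrative assumptions, not Juno's actual schema.
EXTRACTION_SCHEMAS = {
    "Police Report": ["accident_date", "accident_location", "parties_involved"],
    "Medical Record": ["diagnoses", "treatment_dates"],
    "Demand Letter": ["damages", "requested_amount"],
}


def fields_for(label):
    """Return the fields to extract for a label; unknown labels get none."""
    return EXTRACTION_SCHEMAS.get(label, [])


# The label chosen during review decides what gets extracted.
print(fields_for("Medical Record"))  # ['diagnoses', 'treatment_dates']
```

Keeping the schema keyed by label is what makes the flow modular: supporting a new document type means adding one entry rather than changing the review flow.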

After all documents were processed, AI produced a concise intake summary that reported how many files were labeled successfully, flagged files requiring review, and suggested follow-up tasks such as “Review missing medical record,” “Draft demand letter,” or “Verify accident details.”

This summary gave users immediate visibility into what the AI accomplished and what still needed human attention, making the entire process feel traceable and actionable.
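The three parts of that summary (labeled count, files flagged for review, suggested follow-up tasks) can be sketched as a simple aggregation over per-file results. This is a hedged illustration: `ProcessedFile`, its fields, and `build_intake_summary` are hypothetical names, and the real system may structure this differently.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ProcessedFile:
    """Hypothetical per-file result of the intake pass."""
    name: str
    label: Optional[str]            # None means the file could not be labeled
    needs_review: bool = False
    suggested_tasks: List[str] = field(default_factory=list)


def build_intake_summary(files):
    """Aggregate per-file results into the three parts of the summary."""
    labeled_count = sum(1 for f in files if f.label is not None)
    # A file is flagged if it was marked for review or could not be labeled.
    flagged = [f.name for f in files if f.needs_review or f.label is None]
    tasks = []
    for f in files:
        for task in f.suggested_tasks:
            if task not in tasks:   # keep first occurrence, drop duplicates
                tasks.append(task)
    return {
        "labeled_count": labeled_count,
        "flagged_files": flagged,
        "suggested_tasks": tasks,
    }
```

Note that tasks here are collected without a link back to the file that triggered them, which is the same gap the next paragraphs describe.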

The team quickly recognized this as a major improvement — it mirrored how real legal assistants process evidence.

However, our AI models weren’t yet capable of performing label-specific extractions across multiple document types with consistent accuracy. The concept was validated, but full implementation wasn’t technically feasible at the time.

Also, AI-suggested tasks appeared only on the final summary page, disconnected from their source. Users saw what to do next but not why. This lack of context led to the next iteration.

INSIGHTS

Context-first design was the right direction — but the AI needed to catch up.

The next iteration would need to balance structure with contextual verification.

Version 3 — The Hybrid Model (Final Design)

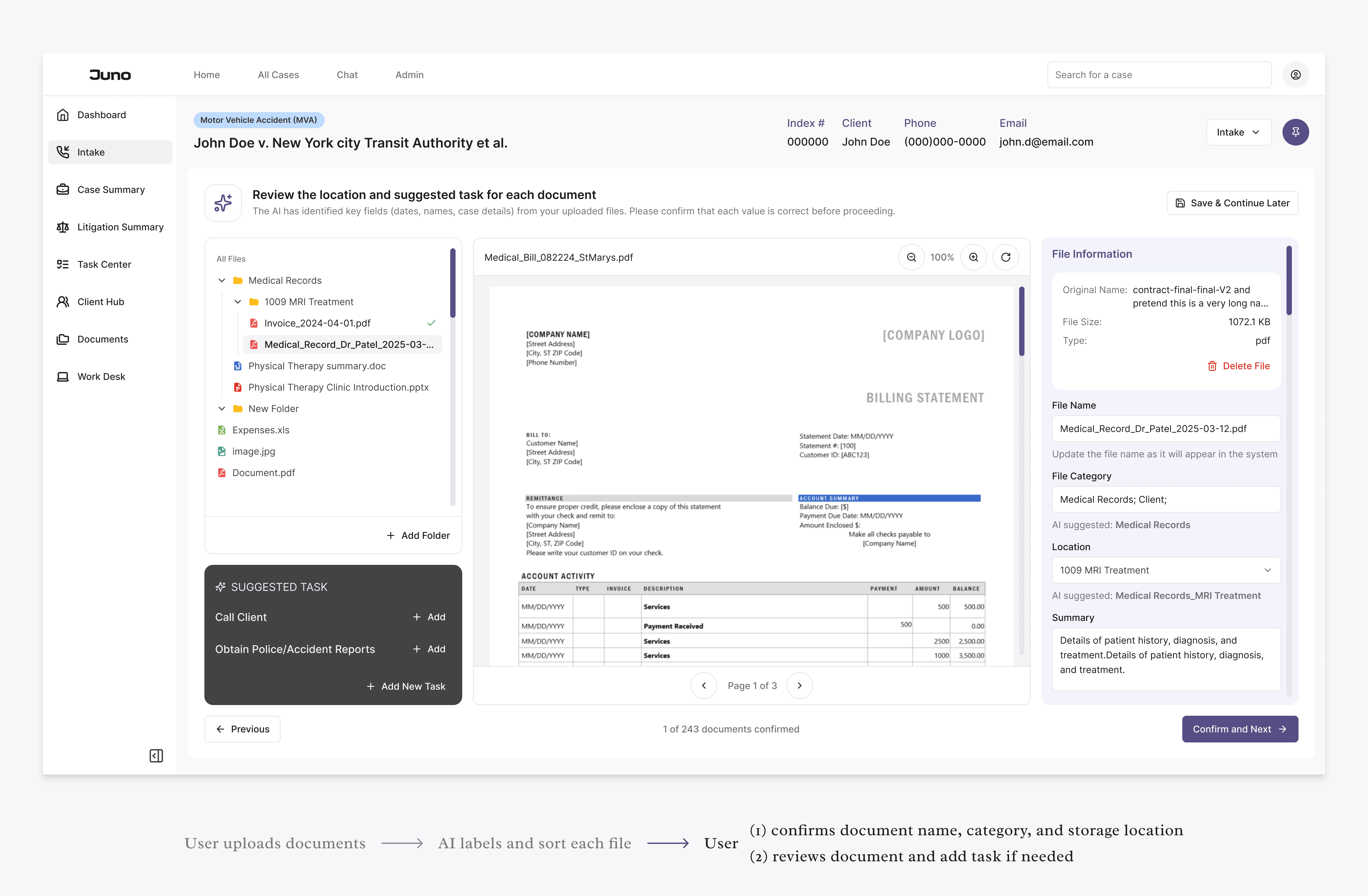

The final version merges the best of both worlds — document-driven intake with guided structure.

In this iteration, we identified that task discovery naturally occurs during document review—the moment when users engage most deeply with the case materials. To support this behavior, we deliberately merged document review, information confirmation, and task suggestion into a single integrated step.

As users confirm each document’s name, category, and storage location, Juno simultaneously analyzes the content and surfaces AI-suggested tasks (e.g., “Request updated medical record,” “Schedule deposition for listed witness”).

This design ensures that new tasks emerge directly from evidence and verified context, creating a more intuitive and action-oriented intake experience.

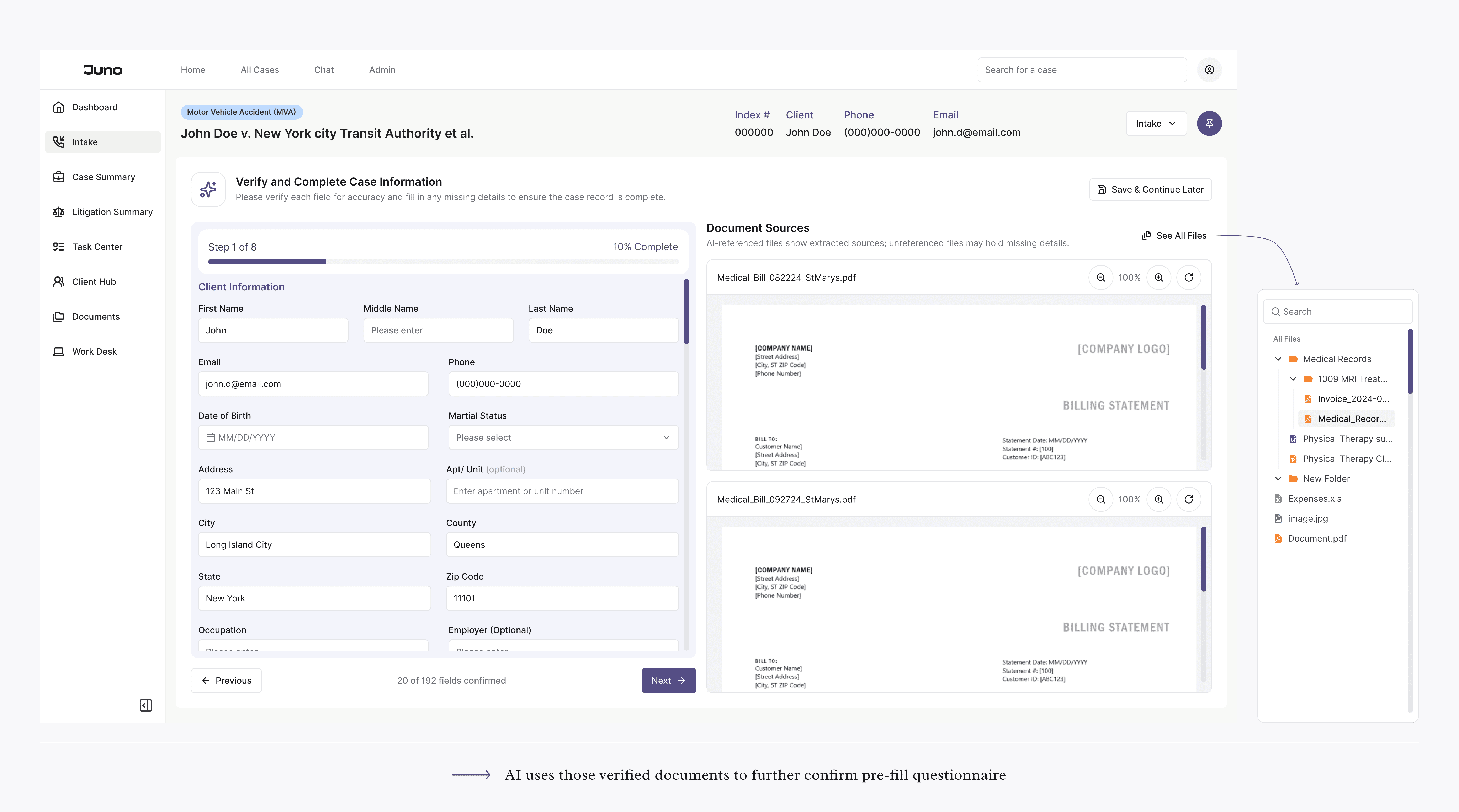

Here, the questionnaire returns, but as a guided review interface, not a rigid framework.

Each section of the questionnaire now displays AI-referenced documents, allowing users to trace every answer to its source. Users can add or correct details directly and proceed confidently.
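One way to picture this traceability is an answer record that carries its referenced documents with it. The sketch below is an assumption for illustration (the `Answer` type, its fields, and `untraceable` are hypothetical); it shows how answers lacking any source could be singled out for closer human review.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Answer:
    """Hypothetical questionnaire answer with its AI-referenced sources."""
    question: str
    value: str
    source_documents: List[str] = field(default_factory=list)
    confirmed_by_user: bool = False


def untraceable(answers):
    """Return questions whose answers cite no document and so cannot be verified in context."""
    return [a.question for a in answers if not a.source_documents]


answers = [
    Answer("Date of accident", "2024-03-14", ["police_report.pdf"]),
    Answer("Treating physician", "Unknown"),
]
print(untraceable(answers))  # ['Treating physician']
```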

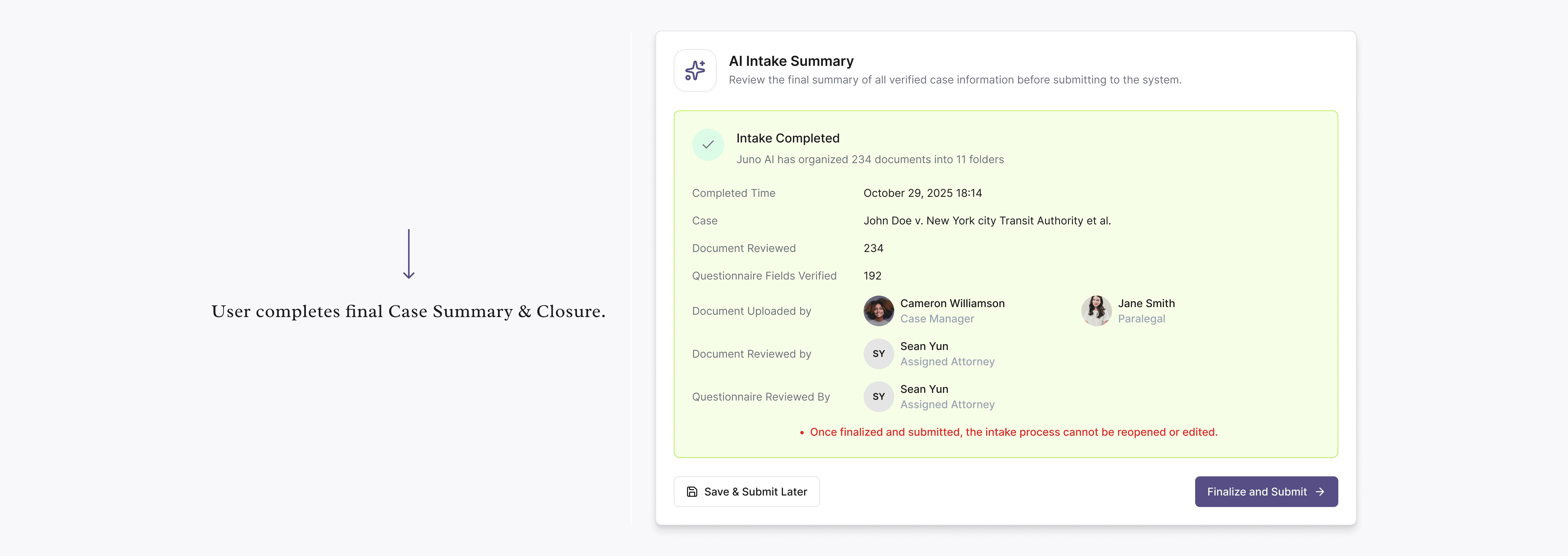

A Summary Page closes the loop, showing what the AI did automatically, which team members participated in the review, and what has been recorded into the knowledge base.

This design provides clarity, control, and closure.

AI handles the heavy lifting, while users retain oversight — a true balance of efficiency and accountability.

INSIGHTS

The final design transforms intake from a one-way extraction process into a collaborative verification experience.

AI learns from documents; users validate and guide it — just like working with a junior paralegal.

Design Principles Learned

Transparency builds confidence.

Showing document sources and end-of-flow summaries makes AI results trustworthy.

Automation needs context.

Structured guidance helps AI output stay understandable and reviewable.

Balance ambition with capability.

Design vision must scale with real AI performance — ambitious but grounded.

Why This Matters - The Impact

Industry studies show that traditional litigation intake is highly time-consuming: a paralegal typically spends 5–7 hours reviewing, naming, categorizing, and extracting information from about 100 documents before a case can begin (ABA Legal Technology Survey, 2023; Thomson Reuters Legal Ops Benchmark, 2022).

With Juno AI Intake, the same workload is completed in under two minutes of automated processing, producing a structured, ready-to-review questionnaire. Even with 30–45 minutes of human verification, this represents an 85–90% reduction in onboarding time, turning what was once a full-day administrative task into a single, guided review session.
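The reduction figure can be checked with simple arithmetic on the numbers quoted above. The sketch below assumes the full two minutes of processing and compares the extreme combinations of the stated ranges; the function name is illustrative.

```python
def time_reduction(manual_hours, verification_minutes, processing_minutes=2):
    """Fraction of onboarding time saved relative to fully manual intake."""
    manual_minutes = manual_hours * 60
    assisted_minutes = verification_minutes + processing_minutes
    return 1 - assisted_minutes / manual_minutes


# Least favorable case: shortest manual baseline, longest verification.
low = time_reduction(manual_hours=5, verification_minutes=45)   # 1 - 47/300
# Most favorable case: longest manual baseline, shortest verification.
high = time_reduction(manual_hours=7, verification_minutes=30)  # 1 - 32/420

print(f"{low:.1%} to {high:.1%}")  # 84.3% to 92.4%
```

Across the quoted ranges the saving falls between roughly 84% and 92%, which brackets the 85–90% figure stated above.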